The Aarhus Summer School on Learning Theory brings together top international PhD students to educate them on fundamental topics in the theory of machine learning. The summer school takes place in beautiful Aarhus, Denmark. Aarhus is often mentioned as one of the happiest cities in the world and a hidden gem for travelers. This makes for a relaxing and inspiring environment for excursions, discussions, and collaborations.

Shai Ben-David

Amin Karbasi

Jessica Sorrell

Amir Yehudayoff

Nikita Zhivotovskiy

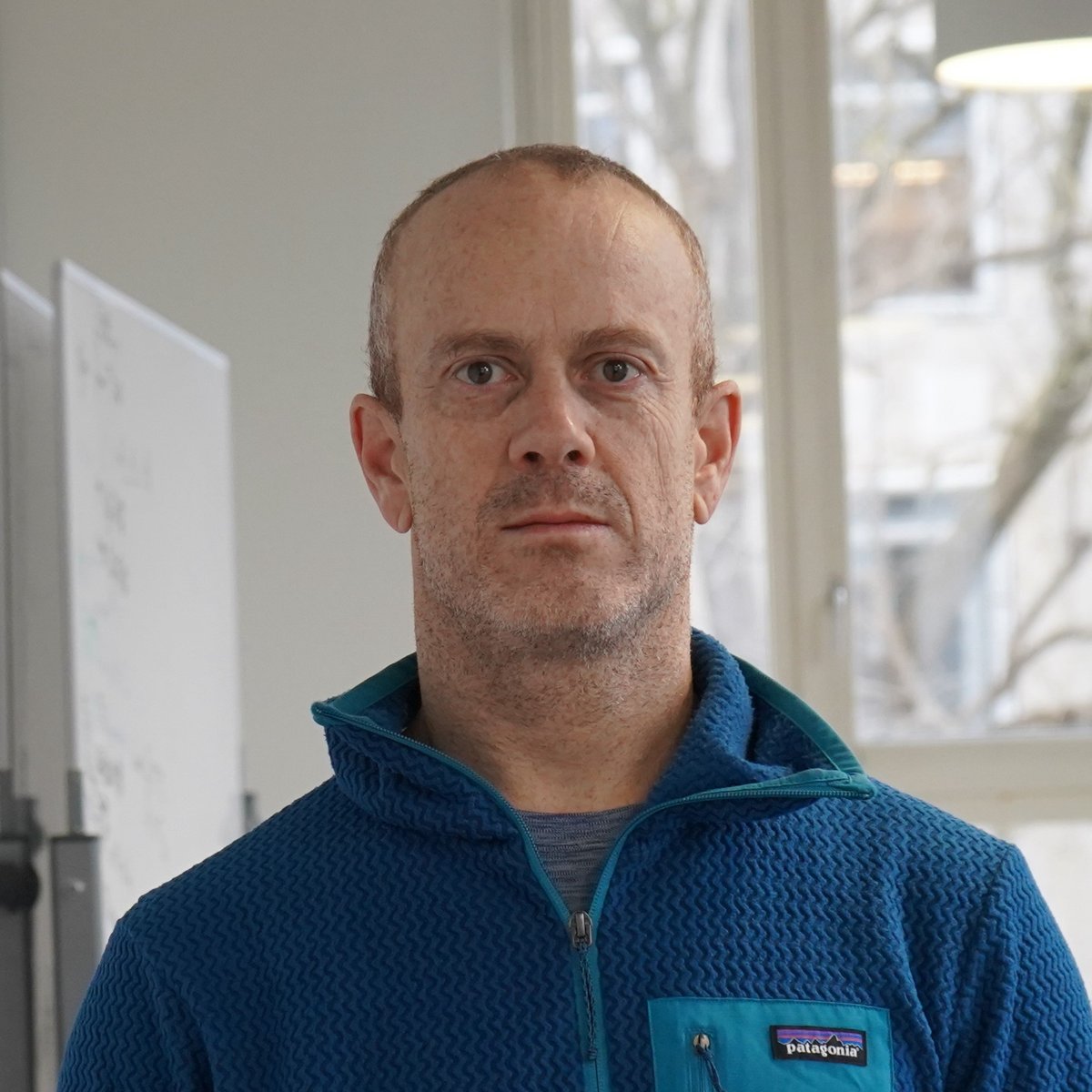

Shai Ben-David

Prof Ben-David’s research interests span a range of topics in computer science theory.

He has worked on a wide range of topics, including logic, the theory of distributed computation, and computational complexity. In recent years his focus has turned to machine learning theory. Among his notable contributions to that field are pioneering steps in the analysis of domain adaptation, of the learnability of real-valued functions, and of change detection in streaming data. In the domain of unsupervised learning, Shai has made fundamental contributions to the theory of clustering (developing tools for guiding users in picking algorithms to match their domain needs) and distribution learning. He has also published seminal works on average-case complexity, competitive analysis, and alternatives to worst-case complexity.

Prof Ben-David’s papers have won various awards, most recently Best Paper awards at NeurIPS 2018 and ALT 2023.

Shai earned his PhD in mathematics from the Hebrew University in Jerusalem and has been a professor of computer science at the Technion (Israel Institute of Technology). Over the years, he has held visiting faculty positions at the Australian National University, Cornell University, ETH Zurich, TTI Chicago, and the Simons Institute at Berkeley.

Since 2004 Shai has been a professor at the David Cheriton School of Computer Science at U Waterloo, and since 2019 a faculty member at the Vector Institute, Toronto. He is also a Canada CIFAR AI Chair, a University Research Chair at U Waterloo, and a Fellow of the ACM.

Highlights:

President of the Association for Computational Learning Theory (2009-2012).

Program chair for the major machine learning theory conferences (COLT and ALT), and area chair for ICML, NIPS and AISTATS.

Co-authored the textbook "Understanding Machine Learning: From Theory to Algorithms".

Amin Karbasi

Amin Karbasi is currently an associate professor of Electrical Engineering, Computer Science, and Statistics & Data Science at Yale University. He is also a research staff scientist at Google NY. He has been the recipient of the National Science Foundation (NSF) CAREER Award, Office of Naval Research (ONR) Young Investigator Award, Air Force Office of Scientific Research (AFOSR) Young Investigator Award, DARPA Young Faculty Award, National Academy of Engineering Grainger Award, Bell Labs Prize, Amazon Research Award, Google Faculty Research Award, Microsoft Azure Research Award, Simons Research Fellowship, and ETH Research Fellowship. His work has also been recognized with a number of paper awards, including at the Medical Image Computing and Computer Assisted Interventions Conference (MICCAI) 2017, the Facebook MAIN Award from the Montreal Artificial Intelligence and Neuroscience Conference 2018, the International Conference on Artificial Intelligence and Statistics (AISTATS) 2015, IEEE ComSoc Data Storage 2013, the International Conference on Acoustics, Speech, and Signal Processing (ICASSP) 2011, ACM SIGMETRICS 2010, and the IEEE International Symposium on Information Theory (ISIT) 2010 (runner-up). His Ph.D. thesis received the Patrick Denantes Memorial Prize 2013 from the School of Computer and Communication Sciences at EPFL, Switzerland.

Jessica Sorrell

Jessica Sorrell is an Assistant Professor in the Department of Computer Science at Johns Hopkins University. Previously, she was a postdoc at the University of Pennsylvania, working with Aaron Roth and Michael Kearns. She completed her PhD at the University of California San Diego, where she was advised by Daniele Micciancio and Russell Impagliazzo. She is broadly interested in the theoretical foundations of machine learning, particularly questions related to replicability, privacy, fairness, and robustness. She also works on problems in lattice-based cryptography, with a focus on secure computation.

Amir Yehudayoff

Amir received his Ph.D. from the Weizmann Institute of Science and was a two-year member at the Institute for Advanced Study in Princeton. He is currently a professor in the Department of Computer Science at the University of Copenhagen and in the Department of Mathematics at the Technion. His main research area is theoretical computer science, with a recent focus on the theory of machine learning.

Nikita Zhivotovskiy

Nikita Zhivotovskiy is an Assistant Professor in the Department of Statistics at the University of California, Berkeley. He previously held postdoctoral positions at ETH Zürich in the Department of Mathematics, hosted by Afonso Bandeira, and at Google Research, Zürich, hosted by Olivier Bousquet. He also spent time at the Technion I.I.T. mathematics department, hosted by Shahar Mendelson. Nikita completed his thesis at the Moscow Institute of Physics and Technology under the guidance of Vladimir Spokoiny and Konstantin Vorontsov.

Shai Ben-David

I will devote three of my lectures to an introduction to the theory of machine learning and the fundamental generalization results of statistical learning. My remaining lectures will cover three concrete and more advanced topics: clustering, unsupervised learning of probability distributions, and fairness in machine-learning-based decision making.

Amin Karbasi

Combinatorial optimization: Many scientific and engineering models feature inherently discrete decision variables, from phrases in a corpus to objects in an image. The study of how to make near-optimal decisions from a massive pool of possibilities is at the heart of combinatorial optimization. Many of these problems are notoriously hard, and even those that are theoretically tractable may not scale to large instances. However, the problems of practical interest are often much better behaved and possess extra structure that makes them amenable to exact or approximate optimization techniques. Just as convexity is a celebrated and well-studied condition under which continuous optimization is tractable, submodularity is a condition under which discrete objectives may be optimized.
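For readers who have not met the term, the standard textbook definition of submodularity (the diminishing-returns form) can be stated as follows. The symbols $V$, $f$, $A$, $B$ and $e$ are notation introduced here for illustration and are not taken from the lecture material.

```latex
% Diminishing-returns definition of a submodular set function.
% V is a finite ground set and f assigns a real value to every subset of V.
f : 2^{V} \to \mathbb{R} \text{ is submodular if, for all } A \subseteq B \subseteq V \text{ and } e \in V \setminus B,
\qquad
f(A \cup \{e\}) - f(A) \;\ge\; f(B \cup \{e\}) - f(B).
```

In words, adding an element to a smaller set helps at least as much as adding it to a larger set that contains the smaller one.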

Continuous optimization: Continuous methods have played a celebrated role in submodular optimization, often providing the tightest approximation guarantees. We discuss how first-order methods can help us solve combinatorial problems in modern machine learning applications.

Jessica Sorrell

Replicability of outcomes in scientific research is a necessary condition to ensure that the conclusions of a study reflect inherent properties of the underlying population and are not an artifact of the methods the scientists used or of the random sample of the population on which the study was conducted. In its simplest form, it requires that if two different groups of researchers carry out an experiment using the same methodology but different samples from the same population, the outcomes of their two studies should be statistically indistinguishable. We discuss this notion in the context of machine learning and try to understand for which learning problems there exist learning algorithms whose outputs are statistically indistinguishable in this sense.

Amir Yehudayoff

Learning entails succinct representation of data. Learning algorithms perform some sort of compression of information. A seminal definition capturing this phenomenon is that of sample compression schemes. The mini-course shall introduce sample compression schemes and survey deep connections to other notions of learning and areas of mathematics.

Nikita Zhivotovskiy

The Exponential Weights (EW) algorithm is a versatile tool with broad utility across statistics and machine learning. Originally developed in information theory, EW has an elegant theoretical framework that tackles many problems. First, we will see how EW can estimate discrete distributions. Next, we will examine its role in ensemble methods for aggregating multiple estimators. Finally, we will discuss EW in logistic and linear regression, where it enables regularization through an online learning approach.
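As a rough illustration of the idea behind EW, the sketch below implements the common prediction-with-expert-advice form of the algorithm: each expert's weight is proportional to the exponential of minus its cumulative loss. This is only a minimal sketch under stated assumptions, and not necessarily the formulation used in the lectures. The function names `exponential_weights` and `_weights_from_losses`, the learning-rate parameter `eta`, and the assumption that losses lie in [0, 1] are all introduced here for illustration.

```python
import math


def _weights_from_losses(cum_losses, eta):
    """Return weights proportional to exp(-eta * cumulative loss)."""
    # Subtracting the minimum loss does not change the normalized weights
    # but avoids underflow when cumulative losses grow large.
    m = min(cum_losses)
    unnorm = [math.exp(-eta * (c - m)) for c in cum_losses]
    z = sum(unnorm)
    return [u / z for u in unnorm]


def exponential_weights(losses, eta):
    """Run exponential weights over a sequence of loss vectors.

    losses: list of rounds; each round is a list of per-expert losses,
            assumed here to lie in [0, 1].
    eta:    positive learning rate (a hypothetical tuning parameter).

    Returns (final_weights, total_expected_loss), where the expected loss
    in each round is taken under the weights held before seeing that round.
    """
    n = len(losses[0])
    cum_losses = [0.0] * n  # cumulative loss of each expert so far
    total = 0.0
    for round_losses in losses:
        weights = _weights_from_losses(cum_losses, eta)
        total += sum(w * l for w, l in zip(weights, round_losses))
        cum_losses = [c + l for c, l in zip(cum_losses, round_losses)]
    return _weights_from_losses(cum_losses, eta), total


if __name__ == "__main__":
    # Two experts: the first never errs, the second always does.
    rounds = [[0.0, 1.0]] * 50
    final_weights, total_loss = exponential_weights(rounds, eta=0.5)
    print(final_weights, total_loss)
```

In this toy run the weight on the consistently better expert approaches 1, and the learner's cumulative expected loss stays small compared with the 50 rounds played.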

Please note that the application deadline has passed.

Please use the button below and fill out the form. The application deadline is May 1. Acceptance/rejection notifications and payment links will be sent within two weeks of the deadline. Attendance at the summer school, including lunch, coffee, cake, and one dinner, costs EUR 100, to be paid by May 21 to secure a spot. Accommodation and travel are not included.